First, I've tried importing using simple SQL statements, however, as it has turned out, creating the dimensional tables and connecting proper foreign keys is a non-trivial task. One solution would have been stored procedures, however, it is platform dependent. Also, coding a custom Java app for the sake of importing seemed to be an overkill also, so I've decided to use Pentaho's ETL tool, recently renamed from Kettle to Pentaho Data Integration.

Using an ETL tool has many advantages:

- Database and platform independence: you can apply your scripts to any DB using any OS (unless you do some vendor specific calls)

- Possibly greater performance as the ETL tool can do transformations in parallel (conversion, sorting, selection, etc.)

- Schedulability (the ETL vendor usually has an admin panel to schedule ETL tasks, like Pentaho BI server). Also, nice logging to check that transformations completed successfully.

- Visual design of the transformation

- Hard to test transformations

- Custom transformational steps are doable but not easy (you can use BeanShell, Javascript and similar Java-compatible scripting languages)

- A transformation to create necessary dimensional tables

- A transformation to select and convert business data and connect it to dimensional data using foreign keys (so that the result is a star schema)

First: do we need separate date and time dimensions? This is an ongoing debate and obviously depends on the particulars, however having them separate often has many advantages. One is that it makes your MDX queries easier to write as you don't bump into limitations on having the same dimensions on both rows and columns in case one of your requirements needs that.

Creating a date dimension:

- Add a row mapper, configure it to generate the necessary amount of rows.

- Add a "Get value from sequence" to add an ID to each row.

- Add a Calculator to create separate year, month and day columns (or add dayofweek, etc.)

- Add a table output step to save the data.

Same as above, use a row mapper to generate rows (24 if you only need hours), use "Get value from sequence" to count from 0 to 23.

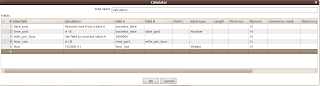

My tranformation looked like this:

Transforming business data

You use the usual transformational steps (filtering, sorting, etc.). The tricky part is how you hook up the data to the dimensional tables.

To do this, use the Lookup -> Database Value Lookup step. The lookup table should be your dimensional table. The key to look up is something you have in both your business data and your dimensional table (eg hour of day). The returned value should be the ID of the dimensional row.

This works flawlessly, as long as you are only working with dates (without time value), as the Calculator step can easily take separate date and time. However, it was not trivial, how to match only part of a time value (hours, minutes, seconds, etc.) as the Calculator step has no way of separating these..

The solution here is to treat a date+time value as a long containing miliseconds and use division, rounding, etc. to take it apart. First, separate the date and time part using the "Remove time from date A" function of Calculator and then do your arithmetic to implement hours, minutes, etc.

The following worked in my case: